IoT solution for high throughput, low latency big data architectures in AWS

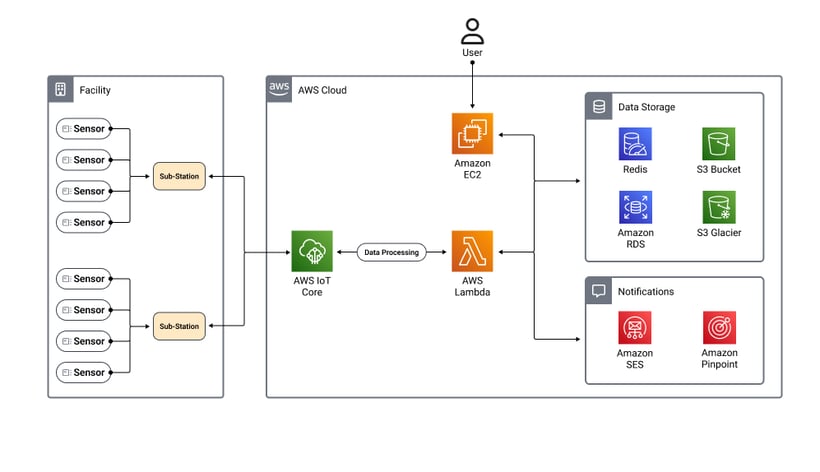

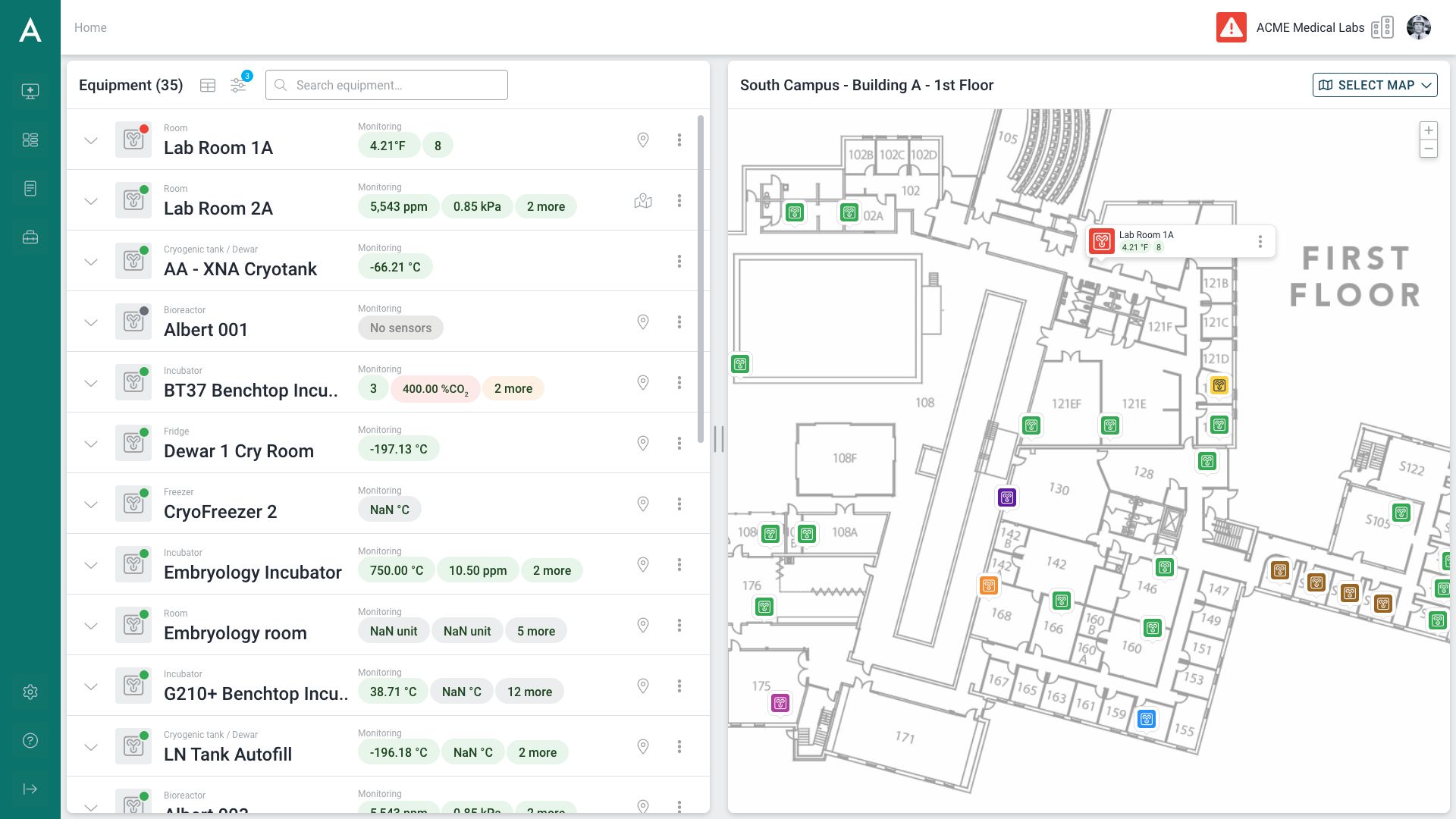

This case study describes the solution ChaiOne built for a global client that needed to process large volumes of complex IoT data from many distributed devices in real time. The system ran on AWS and had to handle complex JSON data packets. Our approach combined two applications: a data processing engine and an n-tier web application for asset management and data rendering in the UI.

The challenge

We had to manage data from several thousand devices, each transmitting complex JSON packets at an average rate of 6 messages per minute. The system needed to store every record for auditing, display data in near real time on browser charts, and raise real-time alerts within the same UI, all in a multi-tenant environment. This meant handling almost 10 million records daily, or more than 3 billion annually.
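To put these figures in perspective, the short calculation below converts the stated rates into per-device and per-second terms. It is only a back-of-the-envelope sketch: the function names are ours, and the inputs are the rounded figures quoted above (6 messages per minute, roughly 10 million records per day).

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_device_daily(messages_per_minute):
    """Messages one device sends in a day at a steady per-minute rate."""
    return messages_per_minute * 60 * 24


def sustained_rate(records_per_day):
    """Average records per second implied by a daily total."""
    return records_per_day / SECONDS_PER_DAY


def annual_volume(records_per_day, days=365):
    """Records per year implied by a daily total."""
    return records_per_day * days


print(per_device_daily(6))                    # 8640 messages per device per day
print(round(sustained_rate(10_000_000), 1))   # 115.7 records per second on average
print(annual_volume(10_000_000))              # 3650000000 records per year
```

The averages hide bursts, so a real ingestion path would need headroom above the sustained rate.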

Comprehensive solution

Our solution was three-pronged, focusing on storage, alert management, and visualization/reporting.

Data storage

We used Amazon S3 for initial data storage because of its cost-effectiveness. To offset its slower read rate, we partitioned the data strategically and used AWS Lambda for pre-processing. The complex JSON structures called for a two-tier storage system, with raw data stored in one partition and processed data in another. This dual system allowed efficient data retrieval and better read performance.
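The two-tier layout can be illustrated with a minimal sketch. The key scheme, the tier names `raw` and `processed`, the tenant and device identifiers, and the flattening step are all assumptions made for illustration; the case study does not describe the actual partitioning or the Lambda pre-processing logic.

```python
from datetime import datetime, timezone


def partition_key(tier, tenant_id, device_id, received_at, message_id):
    """Build a hypothetical S3 object key partitioned by tier, tenant, day, and device."""
    day = received_at.astimezone(timezone.utc).strftime("%Y-%m-%d")
    return f"{tier}/tenant={tenant_id}/dt={day}/device={device_id}/{message_id}.json"


def flatten(packet, prefix=""):
    """Flatten nested JSON objects into dotted keys, one possible pre-processing step."""
    flat = {}
    for key, value in packet.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        else:
            flat[name] = value
    return flat


received = datetime(2024, 1, 15, 23, 30, tzinfo=timezone.utc)
packet = {"sensor": {"temp": {"value": 71.5, "unit": "F"}}, "seq": 12}

raw_key = partition_key("raw", "acme", "pump-7", received, "m1")
processed_key = partition_key("processed", "acme", "pump-7", received, "m1")
print(raw_key)          # raw/tenant=acme/dt=2024-01-15/device=pump-7/m1.json
print(flatten(packet))  # {'sensor.temp.value': 71.5, 'sensor.temp.unit': 'F', 'seq': 12}
```

Putting the tenant and date early in the key lets a reader list only the prefix it needs, which is one common way to keep S3 reads narrow in a multi-tenant setup.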

Alert management

Real-time alert management was critical. We used Redis Cache to store configuration data and alarm states. This setup let us process large volumes of data efficiently and update the UI in real time, significantly reducing the load on the system and on human resources.
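A rough sketch of how cached configuration and alarm state can keep alerting cheap is shown below. A plain Python dict stands in for the Redis cache so the example runs on its own; the key names, the single maximum-threshold rule, and the `AlarmEvaluator` class are hypothetical and not taken from the actual system.

```python
class AlarmEvaluator:
    """Evaluate readings against cached thresholds and report only state changes."""

    def __init__(self, cache):
        # `cache` is a dict here; in production it would be a Redis client.
        self.cache = cache

    def evaluate(self, tenant_id, device_id, metric, value):
        limit = self.cache.get(f"config:{tenant_id}:{metric}:max")
        if limit is None:
            return None  # no threshold configured for this tenant and metric

        state_key = f"alarm:{tenant_id}:{device_id}:{metric}"
        previous = self.cache.get(state_key, "ok")
        current = "alarm" if value > limit else "ok"
        if current == previous:
            return None  # nothing changed, so the UI needs no update

        self.cache[state_key] = current
        return {"device": device_id, "metric": metric, "state": current, "value": value}


cache = {"config:acme:temp:max": 80.0}
evaluator = AlarmEvaluator(cache)
for reading in (75.0, 85.0, 90.0, 70.0):
    print(reading, evaluator.evaluate("acme", "pump-7", "temp", reading))
```

Only the second and fourth readings produce an event, because only those change the stored state. Emitting on transitions rather than on every reading is one way to limit both UI traffic and operator noise.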

Visualization and reporting

We improved S3's effective read rate by aggregating data packets into n-day chunks. Together with nightly data transformation routines, this gave rapid access to historical data and sped up data rendering in the UI. To manage costs, we archived the original granular records in Glacier and reserved S3 for more frequently accessed data.
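The chunking idea can be sketched as follows. This is an assumption-laden illustration: the record shape, the choice to align chunks to a fixed epoch, and the function names are ours, and the case study does not state the value of n or how the nightly routines were implemented.

```python
from collections import defaultdict
from datetime import date, timedelta

EPOCH = date(1970, 1, 1)  # arbitrary fixed origin so chunk boundaries are stable


def chunk_start(day, chunk_days):
    """Return the first day of the n-day chunk that contains `day`."""
    offset = (day - EPOCH).days % chunk_days
    return day - timedelta(days=offset)


def group_into_chunks(records, chunk_days):
    """Group records by device and n-day chunk, as a nightly job might."""
    chunks = defaultdict(list)
    for record in records:
        key = (record["device"], chunk_start(record["day"], chunk_days))
        chunks[key].append(record)
    return dict(chunks)


records = [
    {"device": "pump-7", "day": date(1970, 1, 8), "value": 1.0},
    {"device": "pump-7", "day": date(1970, 1, 9), "value": 2.0},
    {"device": "pump-7", "day": date(1970, 1, 15), "value": 3.0},
]
for (device, start), items in group_into_chunks(records, 7).items():
    print(device, start, len(items))
```

With 7-day chunks, the first two records land in the chunk starting 1970-01-08 and the third in the chunk starting 1970-01-15, so a chart covering a week reads one object per device instead of thousands of small ones.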

Result and impact

Our solution processed over 242 million IoT records in the first month, an average of 8.6 million records per day, demonstrating that it could handle high-volume data reliably. The system enabled our client to process a vast volume of sensor data while significantly reducing the load on their systems and human resources. This architecture delivered substantial financial and productivity benefits for the client.

Services provided:

Software Engineering
